Columbia, Mo. — A web-based tool known as Deeptector.io, which harnesses artificial intelligence to detect synthetic or deepfake videos and images made with AI, won the 2019-20 Missouri School of Journalism’s Donald W. Reynolds Journalism Institute student innovation competition and a $10,000 prize.

Team Deeptector.io (left to right): Caleb Heinzman, Ashlyn O’Hara, Kolton Speer and Imad Toubal.

Defakify won second place and $2,500, while Fake Lab received $1,000 for third place. Deep Scholars also participated, but did not place in the competition.

Each year, the RJI student innovation competition asks students to come up with prototypes, products or tools that could solve a journalism challenge. This year’s challenge, open to college students across the country, tasked teams with developing tools that verify photo, video or audio content to help the industry fight deepfakes and fabricated content.

“I was impressed that all teams really got to the crux of the problem but then took different paths to get to a potential solution,” said Randy Picht, executive director of RJI. “It’s just the latest example of why it’s a good idea to get students into the problem-solving arena.”

Deepfakes are audio, photo and video files that have been manipulated to make them look or sound real. This can include swapping a face onto another person’s body or transferring someone’s facial movements to another person’s face.

The winning team’s product, Deeptector.io, lets users drag and drop a video file, or a YouTube link, into its cloud-based system. The content is then fed through a deep learning algorithm that has been trained to detect differences between fake and real media content, and the algorithm produces a prediction for the user.

The web tool was created by a team from the University of Missouri made up of graduate student Caleb Heinzmann, computer science; senior Ashlyn O’Hara, data journalism; graduate student Kolton Speer, computer science; and graduate student Imad Toubal, computer science.

“I think the biggest thing I took away [from competing] is that there needs to be partnerships between the work journalists are doing and the work that people in the tech field or world of tech are doing,” O’Hara said. “As we mentioned in our presentation, the world is becoming more digitally focused and I think there just needs to be better communication of how people, who are already in this technological field, can help the needs of 21st century journalists.”

The judging

Teams received 20 minutes to pitch their products to industry judges and then fielded questions from the audience and judges. This year’s judges were Lea Suzuki, photojournalist at the San Francisco Chronicle; Nicholas Diakopoulos, director of the computational journalism lab at Northwestern University; Elite Truong, deputy editor of strategic initiatives at The Washington Post; and Jason Rosenbaum, political correspondent at St. Louis Public Radio.

“Based on the judging criteria, which all the teams were also aware of, what it really came down to is whether it’s something that journalists would use,” said Truong of the judges’ first-place decision. “The winner solves a problem that journalists currently face. You can make a really fantastic app and use a lot of awesome technology to do great things. But, it really does come down to, do you know your user? Have you done research on them? Are you solving a problem that they face?”

The other teams that competed were:

Defakify — second place

Team Defakify (left to right): Vijay Walunj, Gharib Gharibi and Anurag Thantharate. NOT PICTURED: Reese Bentzinger.

Team Defakify also uses deep learning, a subset of AI algorithms, to detect fake images and videos. The team provided an application programming interface (API) that lets companies test videos and pictures in bulk, and built the tool so that it could be embedded on social media platforms, for instance.

The team is made up of senior Reese Bentzinger, communications with journalism emphasis; graduate student Gharib Gharibi, computer science; graduate student Vijay Walunj, computer science; and graduate student Anurag Thantharate, computer networking and communication systems, all from the University of Missouri-Kansas City (UMKC).

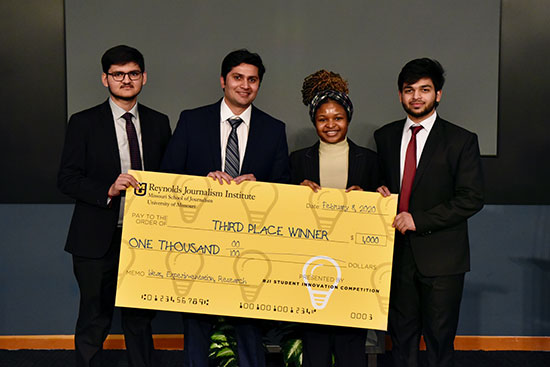

Fake Lab — third place

Team Fake Lab (left to right): Ashish Pant, Raju Nekadi, Lena Otiankouya and Dhairya Chandra.

Team Fake Lab also developed a web-based tool that lets users upload audio and video files to check whether they are real. The tool uses a deep learning algorithm that was trained on more than 40,000 images to help it identify deepfakes.

The UMKC team consisted of graduate student Raju Nekadi, computer science; graduate student Dhairya Chandra, computer science; Lena Otiankouya, communications; and undergraduate student Ashish Pant, computer science.

Deep Scholars

The fourth team that participated, also from UMKC, was Deep Scholars. The team focused on audio and developed a tool to detect voice fraud. The tool converts audio files into a spectrogram, which can show, for example, where tones fluctuate in a human voice versus the single tone of a machine. A deep learning algorithm then determines whether the audio is real or fake.
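To illustrate the spectrogram idea described above, here is a minimal Python sketch. It is not the Deep Scholars implementation, which the article does not describe in detail. The two synthetic signals, the function names and the frame settings are all assumptions made for illustration: a constant 440 Hz tone stands in for a "machine" voice and a tone gliding from 200 Hz to 600 Hz stands in for a fluctuating human voice. A real detector would pass the spectrogram to a trained deep learning model rather than compare one summary number.

```python
import numpy as np

def spectrogram(signal, frame_len=256, hop=128):
    """Magnitude spectrogram: one row per time frame, one column per frequency bin."""
    window = np.hanning(frame_len)
    starts = range(0, len(signal) - frame_len + 1, hop)
    frames = np.stack([signal[s:s + frame_len] * window for s in starts])
    return np.abs(np.fft.rfft(frames, axis=1))

def dominant_frequencies(signal, sample_rate, frame_len=256, hop=128):
    """Frequency in Hz of the strongest bin in each time frame."""
    spec = spectrogram(signal, frame_len, hop)
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sample_rate)
    return freqs[spec.argmax(axis=1)]

sample_rate = 8000                         # samples per second (assumed)
t = np.arange(sample_rate) / sample_rate   # one second of audio

# Hypothetical stand-ins, not real recordings:
flat_tone = np.sin(2 * np.pi * 440 * t)                      # single fixed tone
varying_tone = np.sin(2 * np.pi * (200 * t + 200 * t ** 2))  # glides 200 Hz -> 600 Hz

flat_peaks = dominant_frequencies(flat_tone, sample_rate)
varying_peaks = dominant_frequencies(varying_tone, sample_rate)

# Spread of the dominant frequency over time: small for the fixed tone,
# large for the gliding tone.
flat_spread = flat_peaks.max() - flat_peaks.min()
varying_spread = varying_peaks.max() - varying_peaks.min()

print("fixed tone spread (Hz):", flat_spread)
print("gliding tone spread (Hz):", varying_spread)
```

The spread of the strongest frequency across frames is only a crude proxy for what a spectrogram makes visible. It shows why the representation is useful as model input, not how a production detector would reach a verdict.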

Team members are graduate student Zeenat Tariq, computer science; graduate student Sayed Khushal Shah, computer science; graduate student Vijaya Yeruva, computer science; and senior Frank Burnside, film and media studies.

Reviewed 2020-02-13